Of using Common Crawl to play Family Feud

Family feud meets Big Data

When I was working at Exalead, I had the chance to have access to a 16 billions pages search engine to play with.

During a hackathon, I plugged together Exalead’s search engine with a nifty python package called pattern,

and a word cloud generator.

Pattern allows you to define phrase patterns and extract the text matching a specific placeholders.

I packaged it with a straightforward GUI and presented the demo as a big data driven family feud.

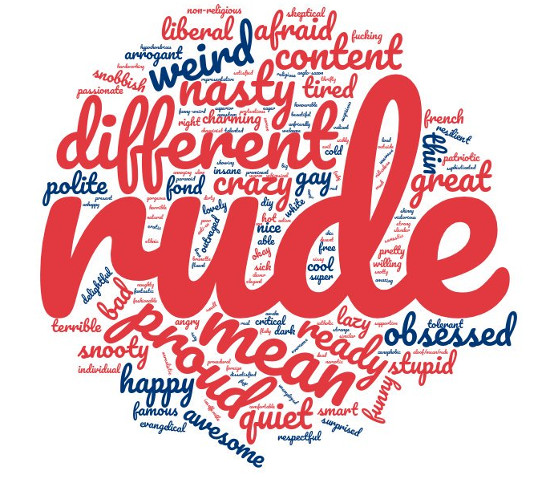

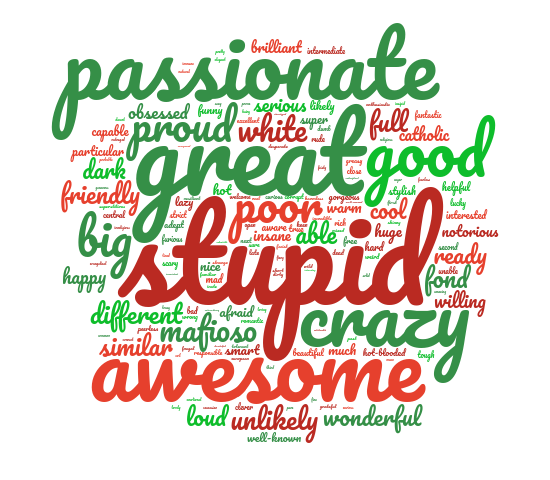

To answer a question like, “Which adjectives are stereotypically associated with French people?”, one would simply enter

French people are <adjective>

The app would run the phrase query "French people are" on the search engine, stream the results to a short python program that would then try and find adjectives coming right after the phrase. The app would then display the results as a world cloud as follows.

I wondered how much it would cost me to try and reproduce this demo nowadays. Exalead is a company with hundreds of servers to back this search engine. Obviously I’m on a tighter budget.

I happen to develop a search engine library in Rust called tantivy. Indexing common-crawl would be a great way to test it, and a cool way to slap a well-deserved sarcastic webscale label on it.

Well so far, I indexed a bit more than 25% of it, and indexing it entirely should cost me less than $400. Let me explain how I did it. If you are impatient, just scroll down, you’ll be able to see colorful pictures, I promise.

Common Crawl

Common Crawl is one of my favorite open datasets. It consists in 3.2 billions pages crawled from the web. Of course, 3 billions is far from exhaustive. The web contains hundreds of trillions of webpages, and most of it is unindexed.

It would be interesting to compare this figure to recent search engines to give us some frame of reference. Unfortunately Google and Bing are very secretive about the number of web pages they index.

We have some figure about the past: In 2000, Google reached its first billion indexed web pages. In 2012, Yandex -the leading russian search engine- grew from 4 billions to tens of billions web pages.

3 billions pages indexed might have been enough to compete in the global search engine market in 2002.

Nothing to sneeze as really.

The Common Crawl website lists example projects . That kind of dataset is typically useful to mine for facts or linguistics. It can be helpful to train train a language model for instance, or try to create a list of companies in a specific industry for instance.

As far as I know, all of these projects are batching Common Crawl’s data. Since it sits conveniently on Amazon S3, it is possible to grep through it with EC2 instances for the price of a sandwich.

As far as I know, nobody actually indexed Common Crawl so far. A opensource project called Common Search had the ambitious plan to make a public search engine out of it using elasticsearch. It seems inactive today unfortunately. I would assume it lacked financial support to cover server costs. That kind of project would require a bare minimum of 40 server relatively high spec servers.

My initial plan and back of the envelope computations

Since the data is conveniently sitting on Amazon S3 as part of Amazon’s public dataset program, I naturally first considered indexing everything on EC2.

Let’s see how much that would have cost.

Since I focus on the documents containing English text, we can bring the 3.2 billions documents down to roughly 2.15 billions.

Common Crawl conveniently distributes so-called WET files that contains the text extracted from the HTML markup of the page. The data is split into 80,000 WET files of roughly 115MB each, amounting overall to 9TB GZipped data, and somewhere around 17TB uncompressed.

We can shard our index into 80 shards including 1,000 WET files each.

To reproduce the family Feud demo, we will need to access the original text of the matched documents. For convenience, Tantivy makes this possible by defining our fields as STORED in our schema.

Tantivy’s docstore compresses the data using LZ4 compression. After We typically get an inverse compression rate of 0.6 on natural language (by which I mean you compressed file is 60% the size of your original data). The inverted index on the other hand, with positions, takes around 40% of the size of the uncompressed text. We should therefore expect our index, including the stored data, to be roughly equal to 17TB as well.

Indexing cost should not be an issue. Tantivy is already quite fast at indexing. Indexing wikipedia (8GB) even with stemming enabled and including stored data typically takes around 10mn on my recently acquired Dell XPS 13 laptop. We might want larger segments for Common-crawl, so maybe we should take a large margin and consider that a cheap t2.medium (2 vCPU) instance can index index 1GB of text in 3mn? Our 17TB would require an overall 875 hours to index on instances that cost $0.05. The problem is extremely easy to distribute over 80 instances, each of them in charge of 1000 WET files for instance. The whole operation should cost us less than 50 bucks. Not bad…

But where do we store this 17B index ? Should we upload all of these shards to S3. Then when we eventually want to query it, start many instances, have them download their respective set of shards and start up a search engine instance? That’s sounds extremely expensive, and would require a very high start up time.

Interestingly, search engines are designed so that an individual query actually requires as litte IO as possible.

My initial plan was therefore to leave the index on Amazon S3, and query the data directly from there. Tantivy abstracts file accesses via a Directory trait. Maybe it would be a good solution to have some kind of S3 directory that downloads specific slices of files while queries are being run?

How would that go?

The default dictionary in tantivy is based on a finite state transduce implementation : the excellent fst crate.

This is not ideal here, as accessing a key requires quite a few random accesses. When hitting S3, the cost of random accesses is magnified. We should expect 100ms of latency for each read. The API allows to ask for several ranges at once,

but since we have no idea where the subsequent jumps will be, all of these reads will end up being sequential. Looking up a single keyword in our dictionary may end up taking close to a second.

Fortunately tantivy has an undocumented alternative dictionary format that should help us here.

Another problem is that files are accessed via a ReadOnlySource struct.

Currently, the only real directory relies on Mmap, so throughout the code, tantivy relies heavily on the OS paging data for us, and liberally request for huge slices of data. We will therefore also need to go through all lines of code that access data, and only request the amount of data that is needed. Alternatively we could try and hack a solution around

libsigsegv, but really this sounds dangerous, and might not be worth the artistic points.

Well, overall this sounds like a quite a bit of work, but which may result in valuable features for tantivy.

Oh by the way, what is the cost of simply storing this data in S3 ?

Well after checking the Amazon S3 pricing details, just storing our 17TB data will cost us around 400 USD per month. Ouch. Call me cheap… I know many people have more expensive hobbies but that’s still too much money for me!

The most important cost of indexing this on EC2/S3 would have been the storage of the index. Around 400 USD per month.

Back to the black board!

By the way, my estimates were not too far from reality.

I did not take in account the WET file headers, that ends up being thrown away. Also, some of the document which passed our English language detector

are multilingual. The tokenizer is configured to discard all tokens that do not contain exclusively characters in [a-zA-Z0-9].

In the end, one shard takes 165 GB, so the overall size of the index would te 13.2 TB.

Indexing Common Crawl for less than a dinner at a 2-star Michelin Restaurant

What’s great with back of the envelope computations is that they actually help you reconsider solutions that you unconsciously ruled out by “common sense”. What about indexing the whole thing on my desktop computer… Downloading the whole thing using my private internet connnection. Is this ridiculous?

Think about it, a 4TB hard drive nowadays on amazon Japan cost around 85 dollars. I could buy three or four of these and store the index there. The 8ms-10ms random seek latency will be actually much more comfortable than the S3 solution. That would cost me around $255, which is around the cost of dinner at a 2-star Michelin restaurant.

What about CPU time and download time ? Well my internet connection seems to be able to download shards at a relatively stable 3MB/s to 4MB/s. 9TB will probably take 830 hours or 34 days. I can probably wait. Once again, indexing at this speed is really not a problem.

In fact, my bandwidth is only fast enough to keep two indexing threads busy, leaving me plenty of CPU to watch netflix and code. On my laptop, 1 thread would probably be ok. Explicitely limiting the number of threads has the positive side effect of allocating more RAM to each segment being indexed. As a result, new segments produced are larger and less merging work is needed.

So I randomly partitioned the 80,000 WET files into 80 shards of 10,000 files each. I then started indexing these shards sequentially. For each shard, after having indexed all documents, I force-merge all of the segments into a single very large segment.

I’m not gonna lie to you. I haven’t indexed Common-Crawl entirely yet. I only bought one 4TB hard disk, and indexed 21 shards (26%). Indexing is in a iatus at this point, because I have been quite busy recently (see the personal news below). Shards are independent : the feasibility of indexing Common-Crawl entirely on one machine is proven at this point. Finishing the job is only a matter of throwing time and money.

Resuming

I recently bought a house in Tokyo and the power installation was not too really suited with morning routine : dishwaser, heater and kettle was apparently too much and our fuses blew half of dozen of times.

This was a very nice test for tantivy’s ability to avoid data corruption and resume indexing under a a black out scenario. In order to make it easier to keep track of the progress of indexing and resume from the right position, tantivy 0.5.0 now makes it possible to embed a small payload with every commit. For common-crawl, I commit after every 10 WET files. The payload is the last WET filename that got indexed.

Reproducing it at home

On the off chance indexing Common-Crawl might interest businesses, academics or you, I made the code I used to download and index common-crawl available here.

The README file explains how to install and run the indexer part.

It’s fairly well package.

You can then query each shard individually using tantivy-cli.

For instance, the search command will stream documents matching a given query. You just need to pass it a shard directory and a query. Its speed will be dominated limited by your IO, so if you have more than one disc, you can speed up the results by spreading shards over different shards and query them in parallel.

For instance, running the following command

tantivy search -i my_index/shard_01 --query "\"I like\""

will output all the documents containing the phrase "I like", in a json format, one document per-line, in no specific order.

Demo time !

I wrote a small python script that reproduces the “family feud” demo. The script just outputs the data and the tag cloud are actually create manually on wordclouds.com Here are a few results.

The useful stuff

First, we can use this to understand stereotypes.

At Indeed, I had to work a lot with domain specific vocabulary.

Jobseeker might search for an RN or an LVN job for instance.

These acronyms were very obscure for me and other most non-native speakers.

If I search for RN stands for, I get the following results

registered nurse

retired nuisance

staff badges

registered nurses

the series code

registered nurse

removable neck

radon

rn(i

the input vector

resort network

certified nurses

registered nut

registered nurse

registered nurse

registered identification number

registered nurse

registered nurses

I got my answer: users searching for RN meant registered nurse. For LVN, the results are similar :

license occupation nurse

registered nurse

license occupation nurse

license occupation nurse

sorry

license occupation nurse

registered nurse

the cause

license occupation nurse

licensed vocational nurse

licensed vocational nursing

license occupation nurse

license occupation nurse

license occupation nurse

LVN stands for licensed vocational nurse.

Boostrapping dictionaries

It’s often handy for prototyping to bootstrap rapidly a dictionary. For instance, I might need rapidly a list of job titles. A fairly non-ambiguous pattern would be

I work as a <noun phrase>

If I run this pattern on my index, I get these 5,000 unique jobtitles

This is not perfect but this pretty good value considering how little was required.

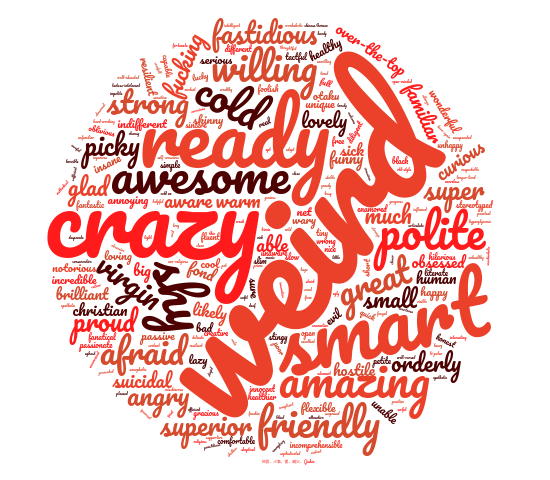

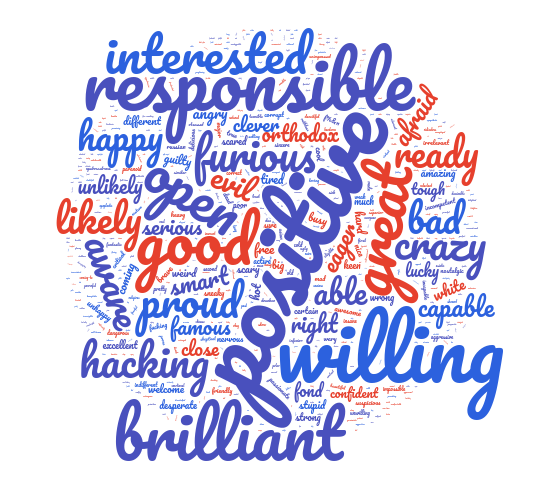

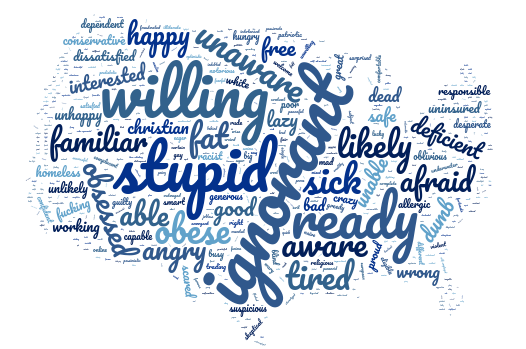

National stereotypes

Here are the results for different nationalities. Hopefully useless disclaimer: This is measuring stereotypes, not actual fact.

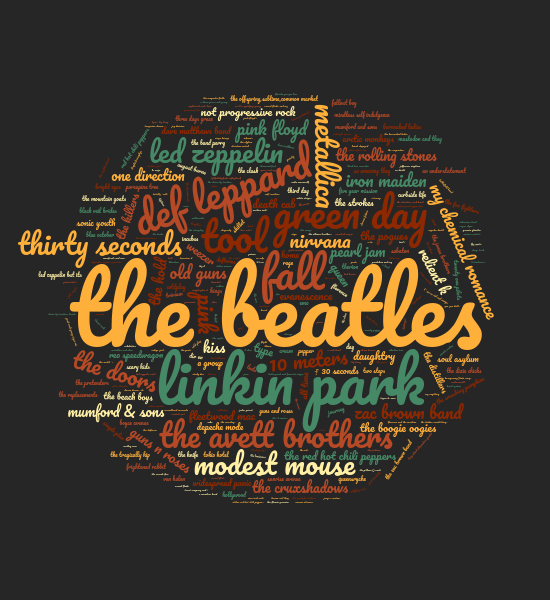

People’s favorite?

One can also try to extract noun phrases instead of a single word. For instance,

here is a list of people’s favorite thing. For instance, the first tag cloud was generated using the pattern: my favorite city is <noun phrase>.

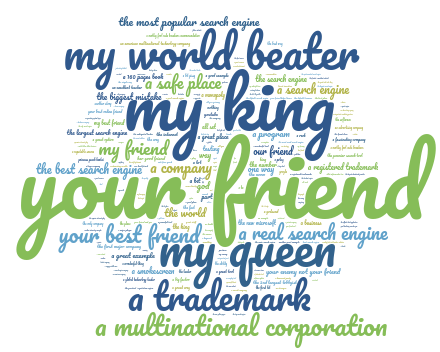

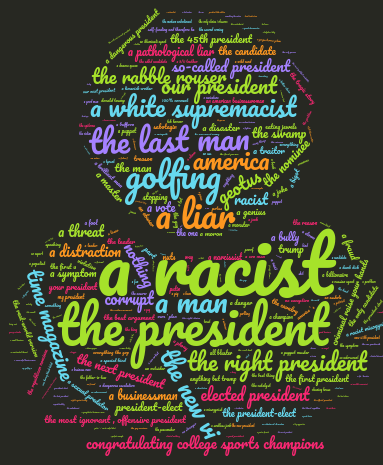

What’s Google? What’s Trump?

It’s also fun to search for the noun phrases associated to something.

For instance, here is what is said about Google.

… or what is said about Donald Trump

A couple of personal news.

You may have noticed I haven’t blogged for a while. In the last few months, I crossed a lot of very interesting things I wanted to blog about but I preferred to allocate my spare time on the development tantivy. 0.5.0 was a pretty big milestone. It includes a lot of query-time performance improvement, faceting, range queries, and more… Development has been going full steam recently and tantivy is getting rapidly close to becoming a decent alternative to Lucene.

I am unfortunately pretty sure I won’t be able to keep up the nice pace.

First, my daughter just got born! I don’t expect to have much time to work on tantivy or blog for quite a while.

Second, I will join Google Tokyo in April. I expect it will this new position to nurture my imposter syndrome. Besides, starting a new job usually bring its bit of overhead to get used to the new position / development environment. The next year will be very busy for me !

By the way, I will travel to Mountain View in May, for Google Orientation. If you know about some interesting events in San Francisco or Mountain View during that period, please let me know!